Generate Apps Locally for Free: App.build Now Supports Open Source Models

Run it locally with Ollama or LM Studio, or in the cloud with OpenRouter


App.build now supports open-weights LLMs via Ollama, LM Studio, and OpenRouter, enabling you to generate complete applications end-to-end without a cloud API dependency or the associated costs.

Why Run App.build Locally?

Zero API costs

Cloud LLM APIs can become expensive fast during extended coding sessions. Even our small dev team can burn through hundreds of dollars in API costs during a single intensive testing session. That’s acceptable for a company but much less so for hobbyists, and we want more enthusiasts to hack on our framework.

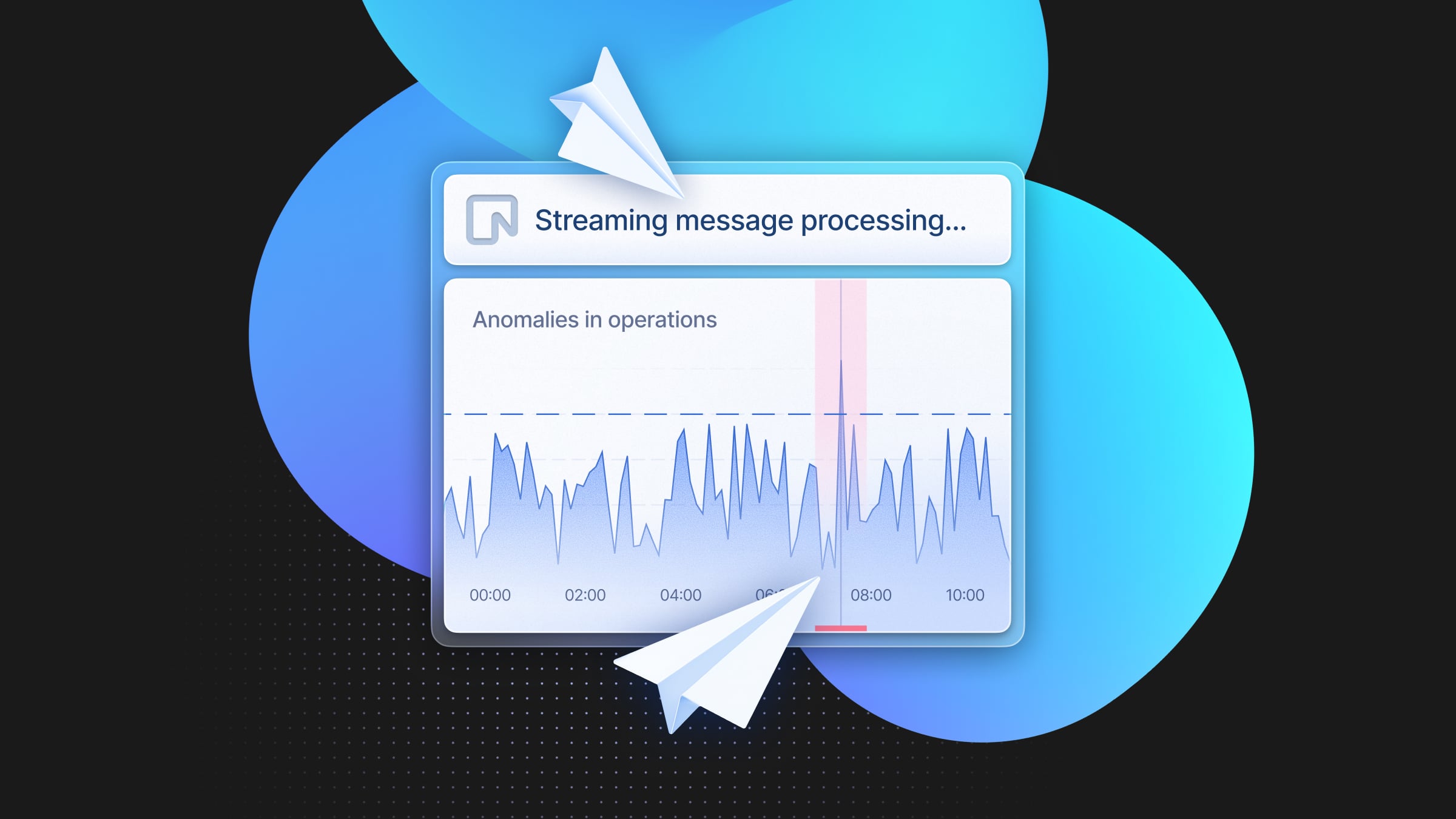

No rate limits or outages

API-based tools frequently hit rate limits during intensive development sessions, especially when major providers struggle with the load. Getting 5xx errors from Anthropic isn’t uncommon.

Data privacy and control

Running locally means your code, ideas, and proprietary information never leave your machine – addressing GDPR, HIPAA, and IP protection concerns without relying on cloud provider policies.

Because it’s cool!

Using open weights models, running everything locally, contributing to the open source ecosystem – sometimes the philosophical reasons matter too. 🙂 We’re having a lot of fun working on app.build, and want to align it with our personal preferences.

The Open Weights Models Situation

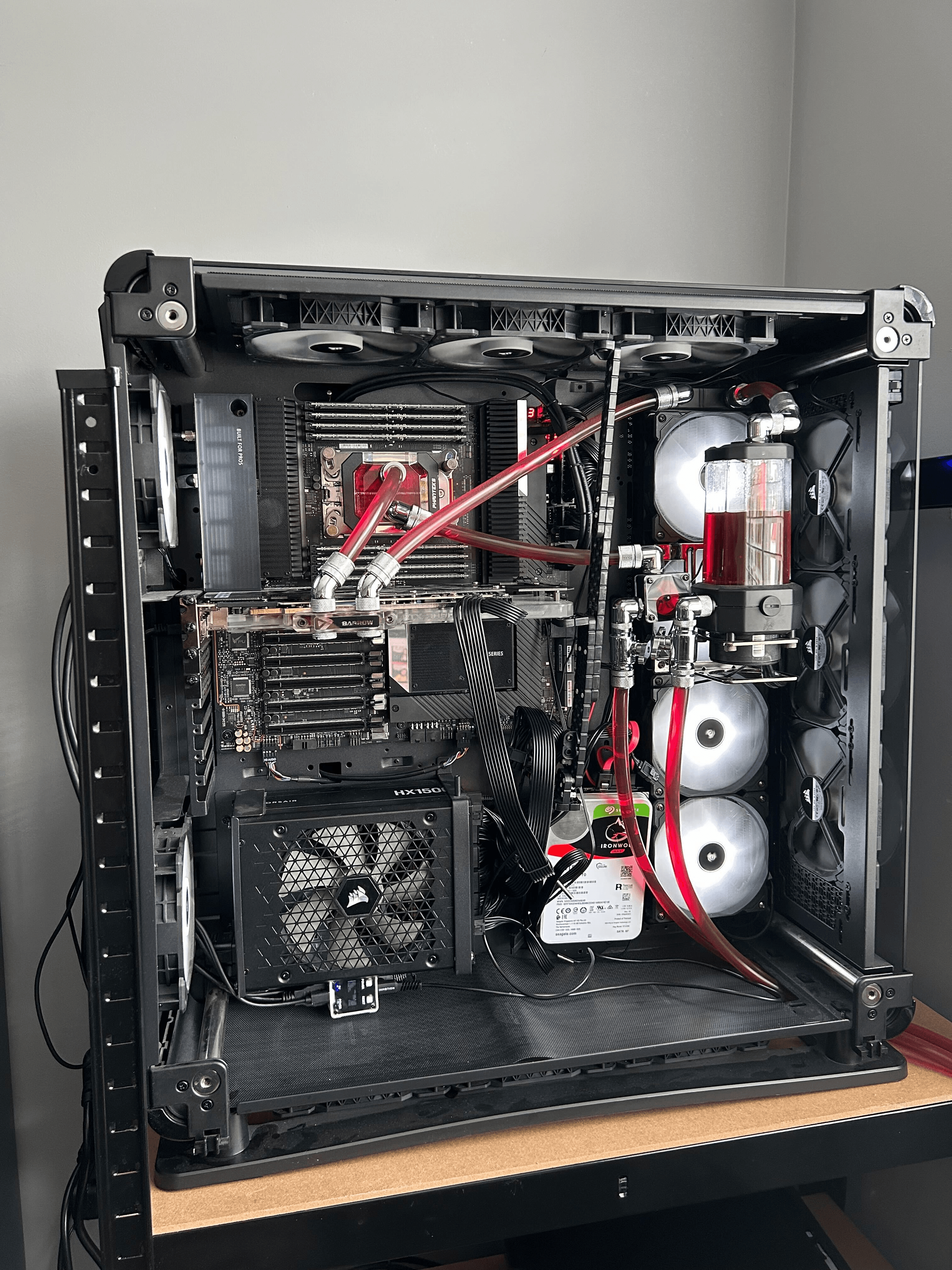

Hardware available for pet projects

Consumer GPUs like the RTX 3090/4090 with 24GB of VRAM can run capable 8B models unquantized (16-bit) or heavily quantized 30B models (e.g. Qwen3-30B-A3B). Apple M4 MacBooks with up to 48GB of unified memory offer excellent local inference performance with even more flexibility.
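As a rough rule of thumb (our simplification, not a measured figure), the memory needed for a model’s weights is its parameter count times the bytes stored per parameter. The KV cache, activations, and runtime overhead come on top of that. Here is a minimal sketch of the arithmetic behind the 24GB claim:

```python
def weight_memory_gb(params_billions: float, bits_per_param: float) -> float:
    """Approximate memory for model weights only, in GB (1 GB = 1e9 bytes).

    Ignores the KV cache, activations and runtime overhead, which add more on top.
    """
    bytes_per_param = bits_per_param / 8
    # (params_billions * 1e9) params * bytes_per_param / 1e9 bytes per GB
    return params_billions * bytes_per_param


print(f"8B @ 16-bit:  {weight_memory_gb(8, 16):.1f} GB")   # unquantized 8B model
print(f"30B @ 4-bit:  {weight_memory_gb(30, 4):.1f} GB")   # heavily quantized 30B model
print(f"30B @ 16-bit: {weight_memory_gb(30, 16):.1f} GB")  # unquantized 30B model
```

The estimates are 16GB for the 16-bit 8B model and 15GB for the 4-bit 30B model, so both leave some headroom on a 24GB card. An unquantized 30B model needs about 60GB and clearly does not fit. Real quantized model files are often somewhat larger than this estimate because some tensors are kept at higher precision.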

For example, mixture-of-experts (MoE) models such as the aforementioned Qwen3-30B-A3B or OpenAI’s recent GPT-OSS-20B run at very reasonable speeds on such laptops: roughly 60-70 tokens per second in 4-bit quantized versions, which is comparable to some popular commercial APIs. Other upcoming hardware for local LLM inference includes the Nvidia DGX Spark and the Asus Ascent GX10, and there are rumors that the next generation of Mac Studio will be a great choice for local LLMs.
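One common way to see why MoE models decode quickly: single-stream token generation is usually limited by memory bandwidth, because each new token requires reading the active weights once. An MoE model only activates the experts routed for each token, so far fewer bytes are read. The sketch below computes a back-of-the-envelope ceiling under that assumption. The ~3B active parameters (hinted by the "A3B" in Qwen3-30B-A3B) and the 300 GB/s bandwidth are illustrative assumptions, not specs of any particular machine.

```python
def max_tokens_per_second(active_params_billions: float,
                          bits_per_param: float,
                          bandwidth_gb_per_s: float) -> float:
    """Bandwidth-bound ceiling on decode speed for a single request.

    Assumes every generated token reads all *active* weights from memory once,
    and ignores KV-cache reads, compute limits and software overhead.
    """
    gb_read_per_token = active_params_billions * bits_per_param / 8
    return bandwidth_gb_per_s / gb_read_per_token


BANDWIDTH = 300.0  # GB/s, hypothetical laptop memory bandwidth

dense = max_tokens_per_second(30, 4, BANDWIDTH)  # dense 30B: every weight is active
moe = max_tokens_per_second(3, 4, BANDWIDTH)     # MoE with ~3B active parameters

print(f"dense 30B ceiling:     {dense:.0f} tok/s")
print(f"MoE ~3B active ceiling: {moe:.0f} tok/s")
```

With these assumptions the ceilings are 20 tok/s for the dense model and 200 tok/s for the MoE model. Observed speeds sit well below such ceilings. Still, the roughly 10x difference in bytes read per token is why a 30B MoE model can feel responsive on a laptop where a dense 30B model would not.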

OpenRouter: best of both worlds

Not everyone is a hardware enthusiast who wants to fill their apartment with GPUs, and that shouldn’t stand in the way of experimenting!

OpenRouter is a service that lets users query many LLMs through a unified API. It covers both closed models and open-weights models served by various infrastructure providers, including Cerebras and Groq, companies whose specialized serving technology enables very fast inference. Unlike local inference, OpenRouter can’t guarantee absolute privacy, though it states that it does not store users’ prompts except where explicitly noted for certain models.
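To illustrate the "unified API" point, here is a minimal sketch that builds an OpenAI-style chat completion request for OpenRouter using only Python’s standard library. Switching between models only changes the `model` string. The function name and the `OPENROUTER_API_KEY` environment variable are assumptions made for this example, not part of app.build. The sketch builds the request without sending it.

```python
import json
import os
import urllib.request

OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"


def build_chat_request(model: str, prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a chat completion request for OpenRouter.

    The request shape is the same for every model; only `model` changes.
    """
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        OPENROUTER_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    # Hypothetical env var name; falls back to a placeholder so nothing is sent.
    key = os.environ.get("OPENROUTER_API_KEY", "sk-placeholder")
    for model in ("qwen/qwen3-coder", "z-ai/glm-4.5-air"):
        req = build_chat_request(model, "Write a haiku about Rome.", key)
        print(req.get_method(), req.full_url, json.loads(req.data)["model"])
        # Sending it would be urllib.request.urlopen(req), which needs a valid key.
```

The two requests differ only in the model identifier, which is what makes it cheap to compare open-weights and closed models through the same client code.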

Using OpenRouter lets you try open-source models that are out of reach for a typical home setup because they require hundreds of GB of VRAM. The pricing is very reasonable: for example, Kimi K2, an open-source model comparable to Claude Sonnet, costs $0.60/$2.50 per 1M input/output tokens (compared with $3/$15 for Sonnet). The model has 1T parameters, which is an absolute blocker for most self-hosting enthusiasts.
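To make the price gap concrete, the sketch below computes what one session would cost at the per-1M-token prices quoted above. The session size (10M input tokens, 1M output tokens) is a hypothetical figure chosen for illustration. It assumes input dominates because an agent repeatedly re-sends its context.

```python
# USD per 1M tokens (input, output), as quoted in the text; real prices change over time.
PRICES = {
    "Kimi K2 (via OpenRouter)": (0.60, 2.50),
    "Claude Sonnet": (3.00, 15.00),
}


def session_cost(input_tokens: int, output_tokens: int, price: tuple[float, float]) -> float:
    """Cost in USD for the given input/output token counts at (input, output) prices per 1M."""
    input_price, output_price = price
    return (input_tokens * input_price + output_tokens * output_price) / 1_000_000


# Hypothetical session size (assumption): input-heavy, as agentic loops tend to be.
INPUT_TOKENS = 10_000_000
OUTPUT_TOKENS = 1_000_000

for name, price in PRICES.items():
    print(f"{name}: ${session_cost(INPUT_TOKENS, OUTPUT_TOKENS, price):.2f}")
```

For this assumed session, the cost is $8.50 with Kimi K2 versus $45.00 with Sonnet, a bit under a fifth of the price.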

What’s the situation with available models?

We don’t yet have a holistic benchmark covering the full range of models (we’re working on closing this gap). However, our early evaluation shows large open-source models performing roughly on par with closed-source alternatives. Honorable mentions: Qwen3-Coder and Kimi K2.

Smaller open-source models don’t perform well enough yet. Building an app in a single shot with a model served on your laptop is a gamble: you may get lucky, but more likely the model gets confused and fails to converge. Some of these failures stem from malformed tool calls, which will likely be fixed in the inference software. Overall, however, performance on agentic tasks, which are crucial for app.build, still lags behind closed models.

We’re certain the next generation of open-source models runnable on consumer hardware will get closer to a production-ready experience. For now, we consider them suitable for less autonomous use cases rather than for generating full apps in a single run.

Getting Started

We added new environment variables to the agent configuration that force it to use non-default models:

```shell
LLM_BEST_CODING_MODEL=openrouter:qwen/qwen3-coder \
LLM_UNIVERSAL_MODEL=openrouter:z-ai/glm-4.5-air \
uv run generate "make me another todo app but make it stylized to Roman Empire because I think about it too often"
```

For local inference, use `ollama:vendor/model` or `lmstudio:host` as the values (plain `lmstudio:` with no host works too, since we like reasonable defaults).
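The values follow a `provider:model-or-host` pattern. The sketch below shows one way such a string could be split and resolved. It is a hypothetical illustration, not app.build’s actual parsing code. The `ModelSpec` and `parse_model_spec` names are invented for this example. The fallback `http://localhost:1234` is assumed here because it is LM Studio’s usual local server address.

```python
from typing import NamedTuple

# Hypothetical default used when the spec is just "lmstudio:".
DEFAULT_LMSTUDIO_HOST = "http://localhost:1234"
KNOWN_PROVIDERS = {"openrouter", "ollama", "lmstudio"}


class ModelSpec(NamedTuple):
    provider: str
    target: str  # model name for openrouter/ollama, server URL for lmstudio


def parse_model_spec(spec: str) -> ModelSpec:
    """Split a 'provider:rest' string such as 'openrouter:qwen/qwen3-coder'.

    str.partition splits on the first ':' only, so Ollama tags like
    'qwen3:30b' and host URLs like 'http://host:1234' stay intact.
    """
    provider, sep, rest = spec.partition(":")
    if not sep or provider not in KNOWN_PROVIDERS:
        raise ValueError(f"expected '<provider>:<model or host>', got {spec!r}")
    if provider == "lmstudio":
        # An empty host ('lmstudio:') falls back to the default local server.
        return ModelSpec(provider, rest or DEFAULT_LMSTUDIO_HOST)
    if not rest:
        raise ValueError(f"missing model name in {spec!r}")
    return ModelSpec(provider, rest)


if __name__ == "__main__":
    print(parse_model_spec("openrouter:z-ai/glm-4.5-air"))
    print(parse_model_spec("lmstudio:"))
```

Rejecting unknown prefixes early keeps a typo in the environment variable from silently falling back to a default model.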

The Bottom Line

Local LLM support gives you the freedom to experiment, prototype, and build without vendor lock-in, cost anxiety, or data-sharing concerns. With open-source models improving rapidly, local app generation is not yet a fully viable alternative to cloud APIs, but it is getting there quickly.

Try app.build locally or use our managed service for free. If you’re interested in building something similar, check out the code (and contribute!).