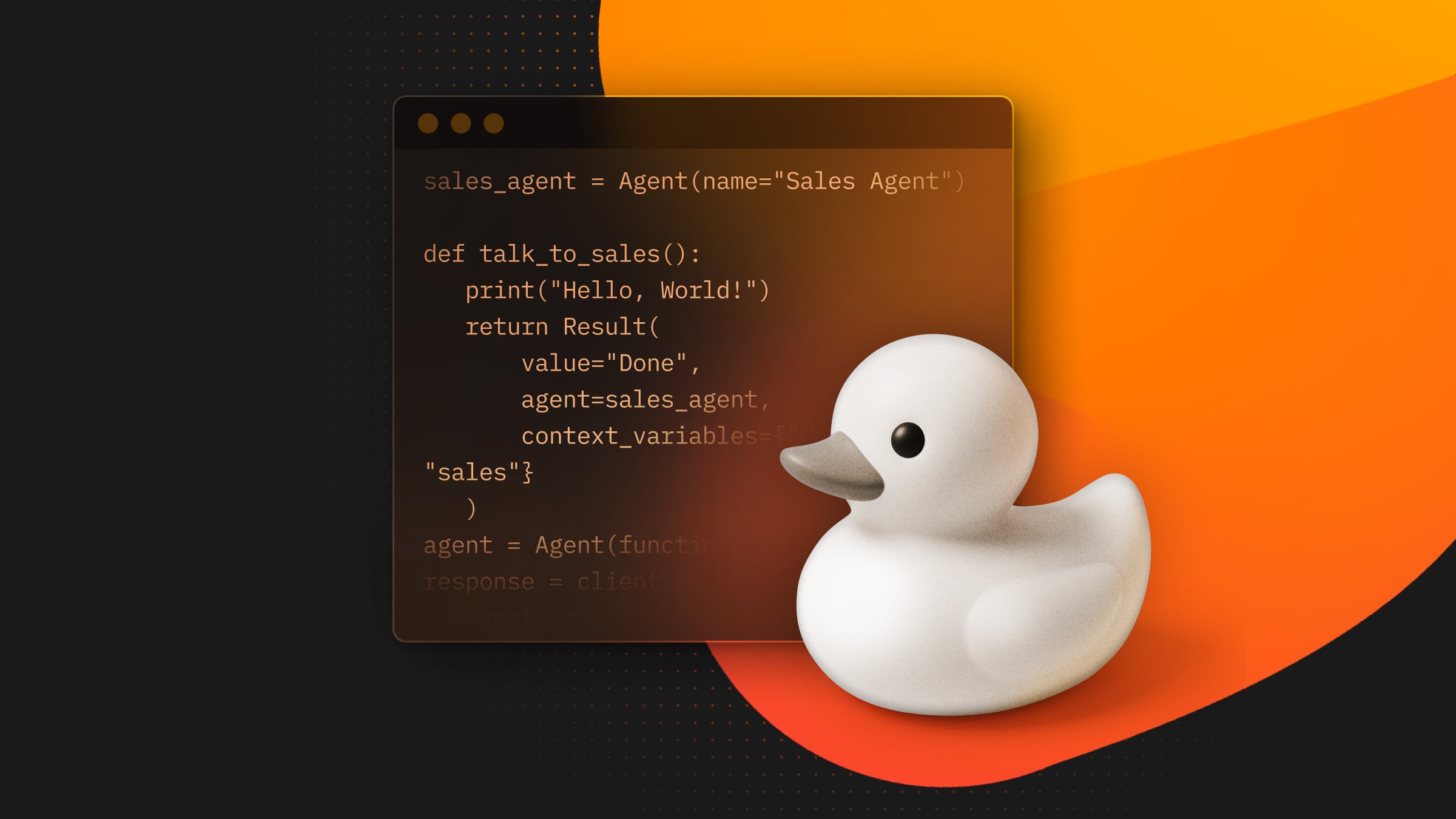

Have you ever explained a bug or issue you’re having to someone, only to discover the solution mid-conversation, as if simply describing the problem helped you catch your own mistake? This is actually quite common and has been used by software engineers for decades, with the official name of rubber duck debugging.

By explaining your issue, even to an inanimate object, you’re forced to evaluate your assumptions and logic from a different perspective. Discussing your problem with another person can add even more value, since they might ask basic questions that help uncover overlooked assumptions and can keep the conversation going. Ideally, you’d always talk to a colleague familiar with the domain, but interrupting a coworker every time you hit a snag won’t make you very popular in the office.

Extending the Rubber Duck Experience in Cursor

This is where I think AI-integrated IDEs, like Cursor, have a very strong use case as a debugging partner. Cursor can act like a better rubber ducky, giving you more than the silent receptiveness of an inanimate object. With the strength of the codebase indexing, it can highlight logical gaps or misinterpretations of your own code, or ask meaningful follow-up questions that help you think more deeply about the issue.

This approach is significantly better than the usual AI debugging methods, like copy-pasting the error message and instantly applying the response, for many reasons. In the short term, you might solve the issue 9/10 times using a “send-and-pray” strategy, but you can quickly lose sight of your codebase if you apply fixes you don’t understand, making subsequent change and future debugging harder as the amount of blindly accepted code increases.

More importantly, however, you will slowly lose the ability to learn, effectively solve problems, and critically think. By having the LLM act as a coworker that probes your understanding and guides your line of questioning, you can reap the benefits of an integrated IDE without chipping away at the skills you rely on for more complex problems.

When compared to more structured AI-driven debugging, where you would provide the full error stack, relevant code snippets, and detailed context, I’d argue the conversational approach still comes out ahead. With complex bugs, these structured reports are typically still not enough, and lead to incorrect recommendations. By contrast, having a conversation gradually builds quality context around the issue, ultimately improving both your understanding and the suggestions provided by Cursor.

Productivity Gains

Given the frequency at which debugging occurs, rewriting almost the same prompt each time isn’t very productive. Instead of repeatedly prompting the agent manually, I created a Cursor ruleset in a .cursor/rules/rubberducky.mdc file. Then, when I wanted to initiate a session, I could reference it with @rubberducky.mdc, and the conversation would start according to my predefined standards. This also has the added benefit of making sessions more consistent, allowing me to tweak the prompt over time.

Another huge help is setting up voice-to-text (like WisprFlow or SuperWhisper), so you can speak to the agent rather than needing to type ideas out. To me, speaking aloud makes the interaction more natural, improves my thought clarity, and gives a much higher words-per-minute throughput compared to typing.

Here, it’s as simple as opening the agent tab, pulling in your ruleset, and off you go.

Rubber Ducking Isn’t Dead, It’s Just Upgraded

Obviously, not every issue requires a full blown conversation, and there’s a time and a place for simply throwing the error into the agent chatbox, briefly reading the response, and applying it if it seems right.

You shouldn’t, however, only do this. Rubber ducky debugging helps build quality context, deepens your understanding of the changes you’re making, and prepares you to tackle more difficult issues.